Chinese Journal of Computational Physics, 2023, Vol. 40, Issue (6): 742-751. DOI: 10.19596/j.cnki.1001-246x.8684

Received: 2022-12-19

Online: 2023-11-25

Published: 2024-01-22

Corresponding author: Zhonghua MA

About the author: Guixin LIU (1997-), female, master's student; research interests: high-performance computing and parallel computing. E-mail: 0221201012@tute.edu.cn


Guixin LIU, Zhonghua MA



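Abstract:

To improve the accuracy of fault identification, an improved Unet model is proposed. For the encoder, a multi-branch parallel structure, the M-block (Multi-branch block), is designed; it captures multi-scale contextual information, and its parallel branches improve performance. In the decoder, a Self-Attention block and an attention-gate mechanism are added. Self-Attention computes a weighted average over the context of the input features, which lets the attention module flexibly focus on different regions of the image and compensates for the locality of CNNs (Convolutional Neural Networks). Experiments on synthetic and field data show that the model combines the weight-sharing advantage of conventional convolution with the dynamically computed attention weights of Self-Attention and improves fault-identification accuracy: compared with Unet, the validation loss drops by 33.68%. The model not only identifies fault features accurately but is also more accurate than currently popular deep learning methods.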
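The abstract describes Self-Attention as a weighted average over the context of the input features, together with an attention gate in the decoder. The NumPy sketch below illustrates both ideas on a single feature map of shape (C, H, W). It assumes scaled dot-product self-attention (Vaswani et al., 2017) with a SAGAN-style learnable residual weight `gamma`, and an additive attention gate in the style of Attention U-Net; the paper's exact layer configuration is not given in this excerpt, and all weight names (`w_q`, `w_k`, `w_v`, `w_x`, `w_g`, `psi`) are hypothetical. 1x1 convolutions are written as matrix products over the channel axis.

```python
import numpy as np


def softmax(z: np.ndarray, axis: int = -1) -> np.ndarray:
    # Subtract the max for numerical stability before exponentiating.
    e = np.exp(z - z.max(axis=axis, keepdims=True))
    return e / e.sum(axis=axis, keepdims=True)


def self_attention(x, w_q, w_k, w_v, gamma=1.0):
    """Self-attention over all H*W positions of a (C, H, W) feature map.

    w_q, w_k: (C_k, C) and w_v: (C, C) play the role of hypothetical 1x1 convolutions.
    Returns the output map and the (N, N) attention matrix, with N = H * W.
    """
    c, h, w = x.shape
    flat = x.reshape(c, h * w)               # (C, N): one column per pixel
    q, k, v = w_q @ flat, w_k @ flat, w_v @ flat
    scores = q.T @ k / np.sqrt(q.shape[0])   # (N, N) query-key similarities
    attn = softmax(scores, axis=-1)          # each row sums to 1
    context = v @ attn.T                     # column i = weighted average of v over all pixels
    out = gamma * context + flat             # residual connection keeps the local CNN features
    return out.reshape(c, h, w), attn


def attention_gate(x, g, w_x, w_g, psi):
    """Additive attention gate: scale skip features x by coefficients in (0, 1).

    x: (C_x, H, W) encoder skip features; g: (C_g, H, W) decoder gating signal,
    assumed already resampled to the same H, W.
    w_x: (C_i, C_x), w_g: (C_i, C_g), psi: (C_i,) are hypothetical 1x1 weights.
    """
    q = np.einsum("ic,chw->ihw", w_x, x) + np.einsum("ic,chw->ihw", w_g, g)
    q = np.maximum(q, 0.0)                                         # ReLU
    alpha = 1.0 / (1.0 + np.exp(-np.einsum("i,ihw->hw", psi, q)))  # sigmoid -> (H, W)
    return x * alpha[None, :, :], alpha
```

With `gamma = 0` the self-attention block reduces to the identity; SAGAN initializes its residual weight this way so the network first relies on local convolutional features and only gradually mixes in global context.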



Guixin LIU, Zhonghua MA. Fault Identification of Post Stack Seismic Data by Improved Unet Network[J]. Chinese Journal of Computational Physics, 2023, 40(6): 742-751.

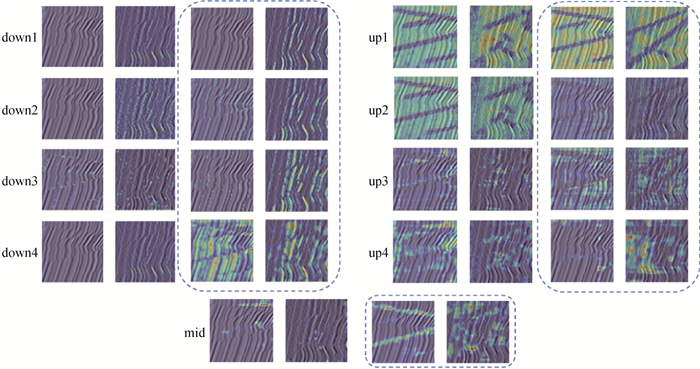

Fig. 6 Feature maps of the Unet network (left) and of M-SA-Unet after adding Self-Attention (inside the blue dashed box)

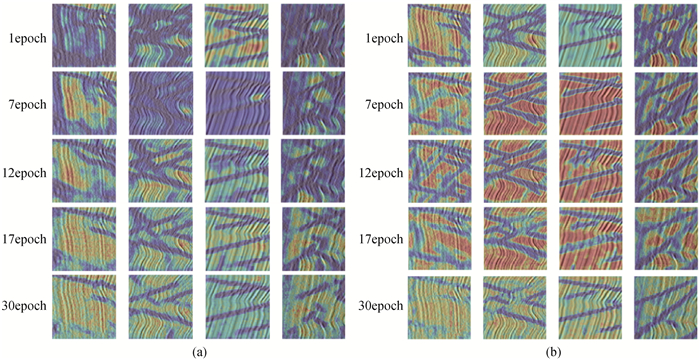

Fig. 7 (a) Feature maps from five epochs selected from the Unet network; (b) feature maps of the improved M-SA-Unet network

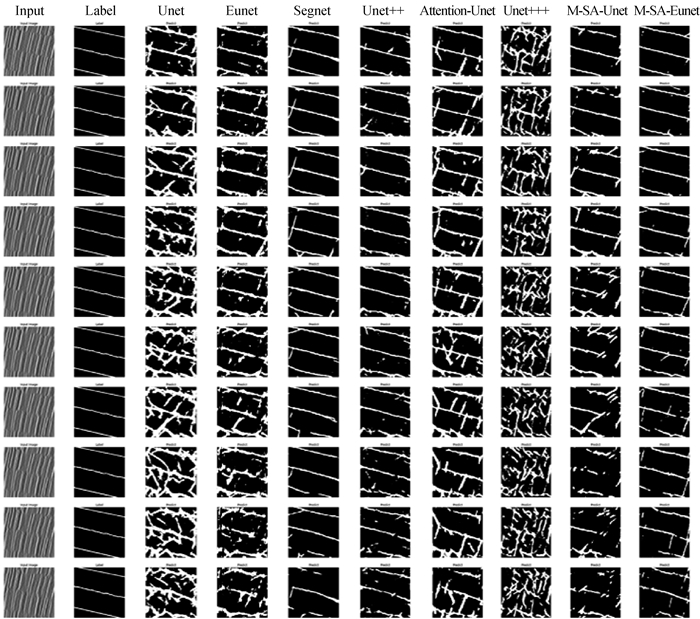

Fig. 10 Comparison of fault identification by M-SA-Unet, M-SA-Eunet, and other models on the prediction frame

| Model | Train loss | Val loss | Epochs | Batch size | Optimizer | Learning rate | Train mIoU | Test mIoU |
|---|---|---|---|---|---|---|---|---|
| Unet | 0.078 | 0.095 | 30 | 16 | AdamW | 0.001 | 0.831 | 0.800 |
| Eunet | 0.058 | 0.091 | 30 | 16 | AdamW | 0.001 | 0.873 | 0.801 |
| Segnet | 0.055 | 0.069 | 30 | 16 | AdamW | 0.001 | 0.890 | 0.875 |
| Unet++ | 0.035 | 0.065 | 30 | 16 | AdamW | 0.001 | 0.911 | 0.904 |
| Unet+++ | 0.065 | 0.113 | 30 | 8 | AdamW | 0.001 | 0.848 | 0.749 |
| Attention-Unet | 0.055 | 0.076 | 30 | 16 | AdamW | 0.001 | 0.911 | 0.877 |
| M-SA-Unet | 0.033 | 0.062 | 30 | 16 | AdamW | 0.001 | 0.914 | 0.882 |
| M-SA-Eunet | 0.034 | 0.063 | 30 | 16 | AdamW | 0.001 | 0.926 | 0.911 |

Table 1 Parameter settings and results of the training and validation process
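The 33.68% reduction in validation loss quoted in the abstract can be checked against Table 1. A short Python check, assuming the figure is a relative change (L_Unet - L_model) / L_Unet (the excerpt does not state the definition explicitly):

```python
# Validation losses copied from Table 1.
VAL_LOSS = {"Unet": 0.095, "Eunet": 0.091, "M-SA-Unet": 0.062, "M-SA-Eunet": 0.063}


def relative_drop(baseline: float, improved: float) -> float:
    """Percentage reduction of `improved` relative to `baseline`."""
    return (baseline - improved) / baseline * 100.0


for name in ("M-SA-Unet", "M-SA-Eunet"):
    print(f"{name}: {relative_drop(VAL_LOSS['Unet'], VAL_LOSS[name]):.2f}%")
# prints:
# M-SA-Unet: 34.74%
# M-SA-Eunet: 33.68%
```

Under this definition the 33.68% figure matches the M-SA-Eunet row (0.095 to 0.063); the M-SA-Unet row (0.095 to 0.062) gives about 34.74%.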


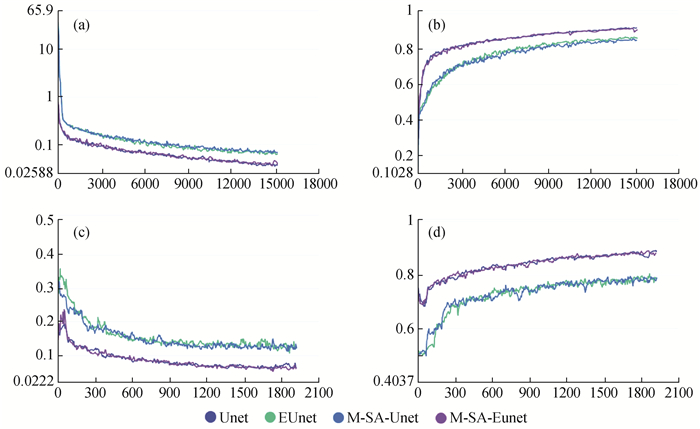

Fig. 11 (a) Training loss, (b) validation loss, (c) training mIoU, and (d) validation mIoU of Unet, Eunet, M-SA-Unet, and M-SA-Eunet
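Table 1 and Fig. 11 report the mean intersection-over-union (mIoU). The excerpt does not say how it is averaged; a common convention for binary fault segmentation, assumed here, is to compute IoU separately for the fault and non-fault classes and average the two. A NumPy sketch, where the 0.5 probability threshold and the 2x3 example are hypothetical:

```python
import numpy as np


def binary_miou(pred: np.ndarray, label: np.ndarray) -> float:
    """Mean IoU over the background (0) and fault (1) classes of binary masks."""
    ious = []
    for cls in (0, 1):
        p, t = pred == cls, label == cls
        union = np.logical_or(p, t).sum()
        if union == 0:
            continue  # class absent from both masks: leave it out of the mean
        ious.append(np.logical_and(p, t).sum() / union)
    return float(np.mean(ious))


# Hypothetical 2x3 example: fault probabilities thresholded at 0.5 (an assumption).
prob = np.array([[0.1, 0.9, 0.4], [0.2, 0.3, 0.8]])
label = np.array([[0, 1, 1], [0, 0, 1]])
pred = (prob >= 0.5).astype(int)
print(round(binary_miou(pred, label), 4))  # 0.7083
```

Here background IoU is 3/4 and fault IoU is 2/3, so the mean is about 0.7083. Because faults occupy few pixels, averaging over both classes keeps the large background class from dominating the score.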

